A few months ago, I was stuck waiting for a traffic light to change while Google Maps took forever to update the route. My 4G signal was fine, but the lag nearly made me miss a turn. That’s when a friend riding with me casually said, “You know this wouldn’t happen if the processing was done right here instead of some distant data center.”

That moment led me down the rabbit hole of edge computing—a concept that’s not just technical jargon, but something quietly reshaping how the world works.

Here’s the thing: Edge computing brings data processing closer to where it’s generated. That one change is rewriting the rules for speed, efficiency, and real-time decisions.

What Is Edge Computing?

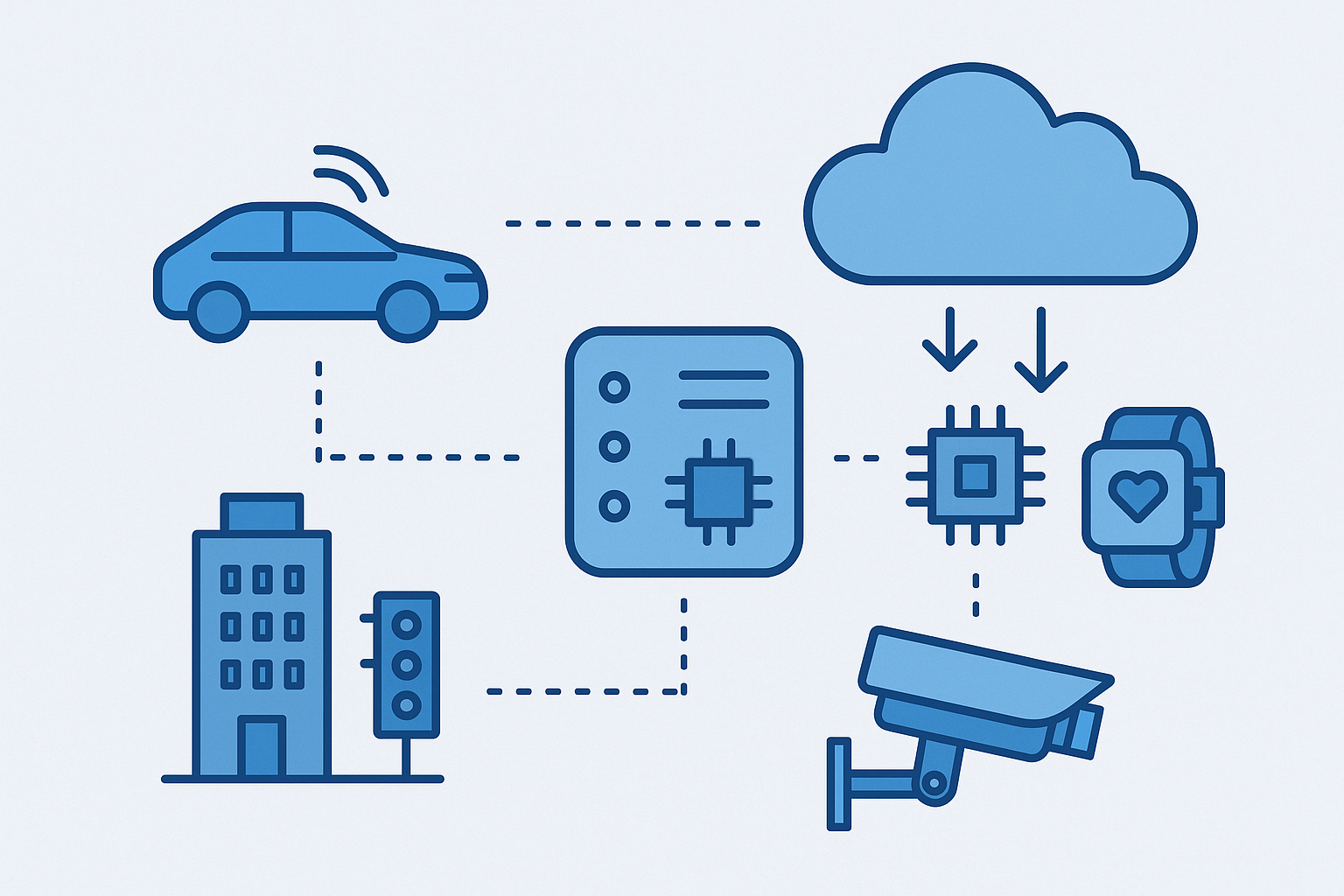

Edge computing means processing data near its source—at the “edge” of the network—rather than sending it all the way to a centralized cloud or data center.

Instead of your smart doorbell sending video footage to a faraway server for analysis, it processes footage locally and only sends relevant clips (like someone actually at the door) to the cloud.
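To make the doorbell example concrete, here is a minimal sketch of that local filtering step. The `Clip` type, the `person_score` field, and the 0.8 threshold are all made up for illustration; a real doorbell would get its score from whatever detection model runs on the device.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    clip_id: str
    # Hypothetical confidence (0.0 to 1.0) from an on-device person detector.
    person_score: float


def select_clips_for_upload(clips, threshold=0.8):
    """Keep only the clips the local detector considers relevant."""
    return [clip for clip in clips if clip.person_score >= threshold]


clips = [Clip("a", 0.05), Clip("b", 0.93), Clip("c", 0.40)]
to_upload = select_clips_for_upload(clips)
print([clip.clip_id for clip in to_upload])  # prints ['b']
```

Only clip `b` leaves the device; the other two never touch the network.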

The Core Idea:

- Traditional model: Devices → Cloud → Processing → Response

- Edge model: Devices → Nearby device/server → Processing → Response

This reduces latency, saves bandwidth, and speeds up decision-making.
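A rough back-of-the-envelope calculation shows where the latency saving comes from. The sketch below only counts signal travel time through optical fiber (light moves at roughly 200,000 km/s in fiber) plus an assumed processing time. It ignores routing, queuing, and the wireless hop, and the distances are illustrative guesses, not measurements.

```python
# Approximate speed of light in optical fiber, about two thirds of c.
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km, processing_ms=0.0):
    """Estimate round-trip time: propagation there and back, plus processing."""
    propagation_ms = 2 * distance_km * 1000 / FIBER_SPEED_KM_PER_S
    return propagation_ms + processing_ms


# Assumed distances: a data center 1,500 km away vs. an edge node 10 km away.
cloud_ms = round_trip_ms(1500, processing_ms=5)
edge_ms = round_trip_ms(10, processing_ms=5)
print(f"cloud: {cloud_ms:.1f} ms, edge: {edge_ms:.1f} ms")
```

Real-world gaps are usually larger than this estimate, since every extra network hop adds its own delay on top of pure propagation.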

Why It Matters

1. Speed

Think self-driving cars. A delay of even half a second can be the difference between safety and disaster. Edge computing lets the vehicle make decisions on board, without waiting on a round trip to a distant server.

2. Reduced Bandwidth Costs

Not everything needs to be uploaded. With edge computing, only important or compressed data is sent to the cloud. That means lower costs and faster performance.
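One way to picture the saving: instead of uploading every raw reading, an edge device can upload a short summary of each time window. This is a sketch under assumed numbers (600 simulated temperature readings, a JSON payload), and `summarize` is a hypothetical helper, not part of any particular platform.

```python
import json
from statistics import mean


def summarize(readings):
    """Collapse a window of raw readings into a few summary statistics."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


# Simulated window: 600 temperature readings hovering around 20 degrees.
raw = [20.0 + 0.01 * (i % 50) for i in range(600)]

raw_bytes = len(json.dumps(raw).encode())
summary_bytes = len(json.dumps(summarize(raw)).encode())
print(f"raw upload: {raw_bytes} bytes, summary upload: {summary_bytes} bytes")
```

The trade-off is that the cloud only sees the summary, so this fits cases where the raw detail is not needed later.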

3. Reliability in Remote Areas

In places with weak or inconsistent connectivity—like offshore oil rigs or rural areas—edge computing keeps systems running even when cloud access is limited.
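A common pattern behind this is store-and-forward: the device keeps working locally, buffers what it could not upload, and drains the buffer once the link returns. Here is a minimal sketch. The `StoreAndForward` class and the `fake_send` function are hypothetical stand-ins, and a real device would also persist the buffer to disk.

```python
from collections import deque


class StoreAndForward:
    def __init__(self, send, max_buffered=1000):
        # `send` is an assumed callable that returns True on a successful upload.
        self._send = send
        # Bounded buffer: when full, the oldest reading is dropped first.
        self._buffer = deque(maxlen=max_buffered)

    def record(self, reading):
        self._buffer.append(reading)
        self.flush()

    def flush(self):
        """Upload buffered readings in order; stop at the first failure."""
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # still offline, keep the reading for later
            self._buffer.popleft()

    def pending(self):
        return len(self._buffer)


# Simulated link that starts offline.
link = {"up": False}
uploaded = []


def fake_send(reading):
    if link["up"]:
        uploaded.append(reading)
        return True
    return False


buffer = StoreAndForward(fake_send)
buffer.record(1)
buffer.record(2)
print(buffer.pending())  # prints 2, nothing could be uploaded yet

link["up"] = True
buffer.record(3)
print(uploaded)  # prints [1, 2, 3], the backlog drained in order
```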

Real-World Use Cases

Smart Cities

Traffic lights that adjust in real time based on actual traffic. Noise sensors that detect illegal street racing. Both rely on edge computing to process sensor data on site and react within moments.

Healthcare

Wearables monitoring heart rate or blood sugar can flag an abnormal reading on the device itself and alert doctors right away, without waiting for a cloud sync.

Retail

In-store cameras analyze foot traffic and customer behavior on the spot to optimize shelf layouts and product placement.

Manufacturing

Machines on the factory floor detect issues and make micro-adjustments before things go wrong, thanks to local processing.
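As a rough illustration of local issue detection, the sketch below flags a sensor reading that strays far from a rolling window of recent values, using a simple z-score check. The `DriftDetector` name, the window size, and the limit of 3 standard deviations are assumptions for the example; real machines typically use detection logic tuned to the equipment.

```python
from collections import deque
from statistics import mean, stdev


class DriftDetector:
    def __init__(self, window=20, z_limit=3.0):
        self._recent = deque(maxlen=window)
        self._z_limit = z_limit

    def check(self, value):
        """Return True if value deviates strongly from recent readings."""
        anomalous = False
        if len(self._recent) >= 5:  # wait for a small baseline first
            mu = mean(self._recent)
            sigma = stdev(self._recent)
            if sigma > 0 and abs(value - mu) / sigma > self._z_limit:
                anomalous = True
        if not anomalous:
            # Only normal readings update the baseline.
            self._recent.append(value)
        return anomalous


detector = DriftDetector()
readings = [10.0, 10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 14.0]
flags = [detector.check(r) for r in readings]
print(flags)  # only the final reading (14.0) is flagged
```

Because the check runs next to the machine, the flag is available as soon as the reading is taken, with no upload in between.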

Cloud vs. Edge: A Quick Comparison

| Feature | Cloud Computing | Edge Computing |
|---|---|---|
| Processing Location | Centralized (data centers) | Local (near data source) |
| Latency | Higher | Very low |
| Bandwidth Usage | High | Low |
| Offline Capability | Limited | Often possible |
| Ideal For | Data storage, analytics | Real-time operations |

They’re not enemies—cloud and edge usually work together. The cloud stores and analyzes historical data, while edge handles what’s happening right now.

Challenges to Keep in Mind

- Security: More devices at the edge mean more points of vulnerability.

- Maintenance: Updating edge devices remotely can be tricky.

- Consistency: Syncing real-time edge data with centralized systems can get messy without proper protocols.

But despite these challenges, edge computing is growing fast—especially with the rise of 5G and AI-powered devices.
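On the consistency point, one simple protocol idea is to tag every edge record with a device ID and a sequence number, so the central system can ignore retried uploads and restore the original order. This is a sketch of that idea only; `CentralStore` and its methods are hypothetical, and real systems add timestamps, conflict rules, and durable storage.

```python
class CentralStore:
    def __init__(self):
        self._records = {}

    def ingest(self, device_id, seq, payload):
        """Accept each (device, sequence) pair once; ignore repeated uploads."""
        key = (device_id, seq)
        if key in self._records:
            return False
        self._records[key] = payload
        return True

    def history(self, device_id):
        """Return one device's payloads ordered by sequence number."""
        items = [
            (seq, payload)
            for (dev, seq), payload in self._records.items()
            if dev == device_id
        ]
        return [payload for _, payload in sorted(items, key=lambda item: item[0])]


store = CentralStore()
store.ingest("cam-1", 2, "b")  # arrives out of order
store.ingest("cam-1", 1, "a")
duplicate_accepted = store.ingest("cam-1", 2, "b")  # retry after a flaky link
print(duplicate_accepted)        # prints False
print(store.history("cam-1"))    # prints ['a', 'b']
```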

Final Thoughts

Edge computing isn’t some far-off futuristic idea. It’s already in your smart fridge, your delivery drone, and your city’s traffic system. As our world demands faster, smarter, and more responsive tech, the edge is exactly where the action’s heading.

Whether you’re a developer, a business owner, or just someone who hates buffering—you’re going to feel the edge. Literally.