AI in Underwriting: What’s Changing, What Works, What to Watch

I remember the first time I applied for insurance: pages of forms, medical tests, follow-up calls. Weeks later, I finally got approved. Now imagine doing that in hours, or even minutes. That’s what AI promises in underwriting.

Here’s the thing: AI isn’t just speeding things up. It’s rethinking what underwriting could be: more precise, more fair, but also riskier in some ways.

What Is Underwriting, and Why It’s Ripe for Disruption

Underwriting is the process where insurers, lenders, or others assess risk and decide who to insure (or lend to) and at what price. Traditionally this relies heavily on:

- manual review of documents

- actuarial tables and historical data

- expert judgement

Problems with the traditional model include delays, inconsistency, limited data sources, bias, and sometimes missed insights. AI can address many of these, but imperfectly.

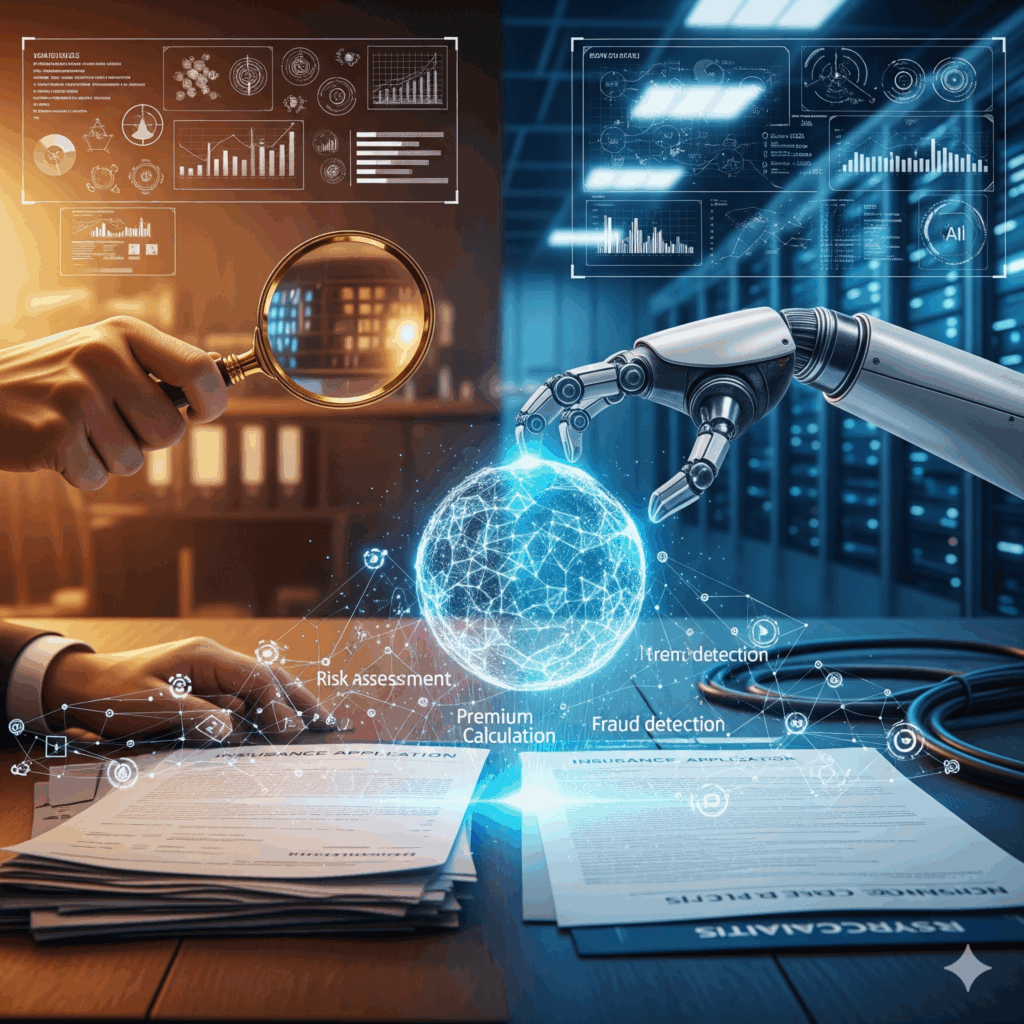

How AI Is Already Being Used in Underwriting

Here are concrete ways AI is changing underwriting, plus what people often get wrong or underestimate.

| Use Case | What AI Does | Benefit | Common Mistake / Myth |
| --- | --- | --- | --- |
| Risk assessment and scoring | AI systems pull in diverse data (claims history plus external data such as weather, location, lifestyle, even satellite imagery) to build predictive models. | More accurate risk classification, potential to offer more tailored pricing. | Myth: more data = better model. Overfitting, poor-quality data, or bias can undo gains. |
| Document processing / data capture | OCR, NLP, and generative AI can extract relevant info, prefill applications, check for missing documents, etc. | Saves time, reduces manual errors, quicker turnaround. | Mistake: neglecting the human in the loop. AI misreads, messy documents, and odd edge cases require human oversight. |
| Automated triage & underwriting workflow | AI can decide which applications are low-risk (and can be auto-approved) and which need more review, then route them accordingly. | Improves efficiency; underwriters can focus on complex cases. Faster decisions. | Myth: full automation is always best. Sometimes you need nuance and judgement, especially in borderline or unusual cases. |
| Use of non-traditional data | Telemetry (telematics in auto), remote sensors, satellite images, wellness trackers, financial behavior data. | Can improve prediction of risk and detect emerging hazards (e.g. climate, disaster risk). More personalized pricing. | Mistake: overlooking data privacy and bias. There is also regulatory risk if you use data that’s unfair or non-transparent. |
| Generative AI / Agentic AI in underwriting | Drafting communications, summarizing risk reports, producing policy conditions, checking against regulatory rules. AI agents can initiate tasks. | Frees up human time, increases consistency, perhaps improves customer experience. | Myth: generative AI is perfect. There is a risk of hallucination, legal/regulatory missteps, or overreliance. |
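
To make the triage row concrete, here is a minimal sketch of threshold-based routing. Everything in it is an assumption for illustration: the `Application` fields, the `triage` function, and the threshold values are hypothetical, and real cutoffs would come from model validation and underwriting policy rather than from this example.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    STANDARD_REVIEW = "standard_review"
    SENIOR_REVIEW = "senior_review"


@dataclass
class Application:
    app_id: str
    risk_score: float        # hypothetical model output in [0, 1]; higher = riskier
    documents_complete: bool
    model_confidence: float  # hypothetical confidence in [0, 1]


# Illustrative thresholds only (assumptions, not recommended values).
AUTO_APPROVE_MAX_RISK = 0.20
SENIOR_REVIEW_MIN_RISK = 0.70
MIN_CONFIDENCE = 0.80


def triage(app: Application) -> Route:
    """Route an application; anything risky or uncertain goes to a human."""
    # High-risk cases always go to the most experienced reviewers.
    if app.risk_score >= SENIOR_REVIEW_MIN_RISK:
        return Route.SENIOR_REVIEW
    # Missing documents or a low-confidence score block auto-approval.
    if not app.documents_complete or app.model_confidence < MIN_CONFIDENCE:
        return Route.STANDARD_REVIEW
    # Only clean, low-risk, high-confidence cases are auto-approved.
    if app.risk_score <= AUTO_APPROVE_MAX_RISK:
        return Route.AUTO_APPROVE
    return Route.STANDARD_REVIEW
```

The design choice worth noting is the default: when no rule clearly applies, the application falls through to human review rather than to auto-approval.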

What AI Adds That Traditional Underwriting Doesn’t

Here’s what AI can bring to the table that many older systems can’t:

- Speed: from days or weeks to hours or minutes.

- Scalability: handle thousands of applications and a variety of data sources.

- Continuously learning models: models that improve as more data comes in.

- Insight into new risk factors: climate risk, behavioral data, geographic patterns.

- Consistency & fairness (if done right): less subjectivity, fewer human mistakes.

Key Challenges, Risks & Ethical Issues

No magic wand. To use AI well in underwriting, organizations must manage:

- Bias / fairness: AI reflects data it is trained on. If that data has historical discrimination, AI may perpetuate or amplify it.

- Transparency & explainability: Underwriters, applicants and regulators want to know why a decision was made. Black-box models can be hard to justify.

- Regulation & compliance: Different jurisdictions have different rules. Some data may be restricted (health, personal data, etc.). Policy oversight needed.

- Data quality & availability: AI is only as good as its data. Missing, inaccurate, or biased data degrade performance.

- Overreliance / automation risk: Automation helps, but in unusual or edge cases, you still need human judgement. Neglecting this can lead to wrong decisions or legal risk.
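
The bias point above can be checked with a simple approval-rate comparison. This is a minimal sketch, assuming decisions are available as `(group, approved)` pairs; the function names, the group labels, and the sample data are hypothetical. The 0.8 cutoff borrows the "four-fifths" rule of thumb from US employment guidance and is used here only as an illustration, not as an insurance or lending standard.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return {group: approval rate} from an iterable of (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    if not rates:
        raise ValueError("no decisions to evaluate")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so the rates do not differ
    return min(rates.values()) / highest


# Hypothetical sample: group A approved 8 of 10, group B approved 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
ratio = disparate_impact_ratio(sample)
needs_review = ratio < 0.8  # illustrative cutoff, not a legal standard
```

A low ratio does not prove discrimination on its own, since groups may differ in legitimate risk factors, but it is a cheap signal that a model deserves a closer look.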

How to Implement AI in Underwriting: A Checklist

If you’re working at an insurer or lending organization and planning to adopt AI in underwriting, consider this checklist:

- Define goals clearly

  - Speed (turnaround time) improvement?

  - Risk accuracy?

  - Cost reduction?

  - Better customer experience or personalization?

- Data audit

  - Inventory available data sources (internal, external).

  - Check for missing, biased, or poor-quality data.

  - Ensure compliance with privacy/regulations.

- Model design & governance

  - Choose what kind of AI to use: predictive models, rule-based, generative, agentic.

  - Plan for explainability (so decisions can be understood).

  - Regularly test for bias.

  - Include a human in the loop for reviews.

- Integrate into workflows

  - Prefill systems, document intake, routing / triage, approvals.

  - Make sure underwriters are part of the design process so they adopt and trust the system.

- Regulatory checks & ethical review

  - Consult legal & compliance teams.

  - Prepare for audits, disclosures.

  - Be clear about what data you use and why.

- Continuous monitoring & improvement

  - Track performance metrics: accuracy, false positive/negative rates, time saved, customer satisfaction.

  - Watch for drift (when a model becomes less accurate over time).

  - Update models, retrain, adjust thresholds as needed.
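
For the drift item in the checklist, one widely used early-warning measure is the Population Stability Index (PSI), which compares the distribution of recent model scores with a baseline. The sketch below is one possible approach rather than a prescribed method. It assumes scores lie in [0, 1] and that both lists are non-empty; the function names and the equal-width binning are choices made for illustration. A shift in scores is a warning sign, not proof that accuracy has dropped, so it complements outcome-based metrics rather than replacing them.

```python
import math


def bin_proportions(scores, n_bins=10, floor=1e-6):
    """Share of scores in each equal-width bin on [0, 1], floored to avoid log(0)."""
    counts = [0] * n_bins
    for s in scores:
        idx = min(int(s * n_bins), n_bins - 1)  # a score of exactly 1.0 goes in the last bin
        counts[idx] += 1
    total = len(scores)
    return [max(c / total, floor) for c in counts]


def population_stability_index(baseline, recent, n_bins=10):
    """PSI = sum over bins of (recent - baseline) * ln(recent / baseline)."""
    expected = bin_proportions(baseline, n_bins)
    actual = bin_proportions(recent, n_bins)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical scores: a uniform baseline and a recent batch shifted toward high risk.
baseline_scores = [i / 100 for i in range(100)]
recent_scores = [0.5 + i / 200 for i in range(100)]

psi_unchanged = population_stability_index(baseline_scores, baseline_scores)
psi_shifted = population_stability_index(baseline_scores, recent_scores)
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but those cutoffs are conventions and should be tuned to your own portfolio.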

What the Future Looks Like

What’s coming next in AI underwriting:

- Agentic AI taking over more complex underwriting tasks, not just support tasks.

- More use of non-traditional data like climate risk, sensor/IoT data, satellite imaging, lifestyle data.

- Better tools for explainability and fairness, driven by regulations and consumer expectations.

- Hybrid models: human + AI doing what each does best. Humans for nuance, judgment; AI for scale, pattern recognition.

Common Myths vs Reality

- Myth: AI will replace underwriters entirely. Reality: AI is more likely to assist and automate routine parts; complex decisions still require humans.

- Myth: More data always equals better decisions. Reality: quality, relevance, bias matter more.

- Myth: AI decisions are objective by default. Reality: AI inherits biases in data and design; needs active guardrails.

Case Snapshot: How One Insurer Did It (Short Anecdote)

An insurer (let’s call them “FutureCover”) wanted to reduce health insurance underwriting time from 10 days to under 48 hours. They brought in an AI module that could:

- Extract medical history from records via NLP

- Pull in external wellness/lifestyle data (with consent)

- Score risk automatically for standard cases

- Route complex / high-risk cases to senior underwriters

Result: standard applications now get priced and issued in ~24 hours, freeing underwriters to focus on heavy cases. Claim denials dropped because data was more complete. But they also discovered some biases. For example, wellness data skewed toward people who engage with digital health tools, so they had to adjust for that.
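
One way to spot the kind of skew described above is to compare how often an optional data source is present across applicant segments. The sketch below is purely illustrative: the `coverage_by_segment` helper, the age-band segments, and the sample records are hypothetical and are not taken from the FutureCover example.

```python
from collections import defaultdict


def coverage_by_segment(applicants, field):
    """Share of applicants in each segment with a non-missing value for `field`."""
    present = defaultdict(int)
    totals = defaultdict(int)
    for applicant in applicants:
        segment = applicant["segment"]
        totals[segment] += 1
        if applicant.get(field) is not None:
            present[segment] += 1
    return {seg: present[seg] / totals[seg] for seg in totals}


# Hypothetical records; None means no wellness data was shared.
applicants = [
    {"segment": "18-39", "wellness_score": 0.7},
    {"segment": "18-39", "wellness_score": 0.6},
    {"segment": "18-39", "wellness_score": None},
    {"segment": "18-39", "wellness_score": 0.9},
    {"segment": "60+", "wellness_score": None},
    {"segment": "60+", "wellness_score": 0.5},
    {"segment": "60+", "wellness_score": None},
    {"segment": "60+", "wellness_score": None},
]

coverage = coverage_by_segment(applicants, "wellness_score")
```

If coverage differs sharply between segments, a model that rewards having wellness data may be rewarding digital engagement rather than lower risk, which is a cue to reweight, cap the feature's influence, or treat missing values explicitly.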

What It Means for You (If You’re in It, Buying It, or Regulating It)

If you aren’t already thinking about AI in underwriting, you should start. Whether you’re an insurer, lender, vendor, or regulator, here’s what to consider:

- For organizations: AI is a competitive advantage. But implementing it poorly can backfire (bias, regulatory trouble, customer distrust). Invest thoughtfully.

- For customers (prospective insureds or borrowers): expect faster responses and maybe more personalized pricing, but ask how your data is used, whether AI models are explainable, and whether there’s an appeal or override process for mistakes.

- For regulators: set rules around fairness, transparency, acceptable data sources, and audit trails.

Final Thoughts

AI in underwriting is more than just a productivity hack. It’s a chance to reinvent part of what underwriting should do: better risk insights, faster decisions, more fairness. But it isn’t magic. Getting it right means balancing automation with judgment, data with ethics, speed with reliability.